Introduction

Have you heard about ChatGPT and wondered what it is and how it works? ChatGPT is an example of a large language model, or LLM for short. LLMs are a revolutionary new type of artificial intelligence that can understand and generate human-like text.

Essentially, LLMs are giant AI systems that have been trained on massive amounts of text, much of it from the internet: we’re talking hundreds of billions of words or more from websites, books, and articles. By analyzing all this text, LLMs learn the patterns and intricacies of human language. This allows them to not only interpret what we say to them, but also generate fluent responses that sound like they could have been written by a person.

What’s amazing about LLMs is their versatility. The same core language model can be adapted to perform all kinds of tasks just by giving it a few instructions and examples. Need help writing an essay? An LLM can do that. Want to analyze a legal contract? An LLM can assist. Interested in computer programming? An LLM can even generate code.

While the technology behind LLMs is highly complex, involving neural networks with billions of parameters, what’s important to understand is that LLMs represent a huge leap in making AI accessible and useful to the average person. You don’t need to be a computer scientist or AI expert to take advantage of their capabilities.

Exploring Large Language Models

In the following article, we’ll take a deep dive into the world of large language models. We’ll explore how they work, who the major players are, some of the most well-known LLMs like ChatGPT, and the wide range of applications they enable. Whether you’re a complete AI novice or have some technical background, this guide will give you a thorough understanding of one of the most exciting and impactful areas of artificial intelligence today. No computer science degree required!

What are Large Language Models?

At their core, large language models are a type of AI that uses deep learning techniques to process and understand vast amounts of text data. They are called “large” because they are trained on massive datasets, often containing billions of words from books, articles, and websites. This extensive training allows LLMs to learn the intricate patterns, grammar rules, and contextual meanings of human language.

The key innovation behind modern LLMs is the transformer architecture, introduced in 2017. Unlike earlier language models that read text one word at a time, transformers can process all the words in a passage in parallel while still capturing their relationships and context. This breakthrough has enabled the creation of LLMs with unprecedented scale and capability.
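For readers who like to see ideas in code, the mechanism transformers use to relate every word to every other word is called self-attention. The following is a minimal sketch of it in plain Python, not a real transformer: the two-number word vectors are made up for illustration, and the learned weight matrices that real models apply first are left out.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections.

    Assumption for this sketch: each word vector acts as its own query,
    key, and value. Real transformers multiply by learned matrices first.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Score this word against every word in the passage, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Blend all word vectors, weighted by how relevant each one is.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
    return outputs

# Hypothetical 2-dimensional vectors standing in for three words.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual = self_attention(embeddings)
```

Each pass through the outer loop depends only on the original word vectors, never on another word's result. That independence is why the work can be done for all words at once on parallel hardware.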

How Do Large Language Models Work?

To understand how LLMs function, let’s walk through a simplified example. Imagine you provide an LLM with the prompt: “The capital of France is”. Based on the patterns it has learned from its training data, the model assigns a probability to each possible next word and picks a likely one. In this case, the most probable continuation is “Paris”.
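The “predict the next word” idea can be imitated with a toy program. The sketch below simply counts which word follows each pair of words in a tiny, made-up training set. Real LLMs use neural networks with billions of parameters rather than count tables, so treat this only as an illustration of the principle; the corpus and function names are invented for this example.

```python
from collections import Counter, defaultdict

CONTEXT = 2  # how many preceding words this toy model looks at

# Hypothetical three-sentence "training set"; real models see vastly more text.
corpus = [
    "the capital of france is paris",
    "the capital of italy is rome",
    "paris is the capital of france",
]

def train(sentences):
    """Count which word follows each CONTEXT-word window."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i in range(len(words) - CONTEXT):
            context = tuple(words[i:i + CONTEXT])
            counts[context][words[i + CONTEXT]] += 1
    return counts

def predict_next(counts, prompt):
    """Return the most frequent follower of the prompt's last words, if any."""
    context = tuple(prompt.lower().split()[-CONTEXT:])
    followers = counts.get(context)
    if not followers:
        return None  # this context never appeared in training
    return followers.most_common(1)[0][0]

model = train(corpus)
print(predict_next(model, "The capital of France is"))  # prints "paris"
```

The toy model answers correctly only because it has seen those exact words before. An LLM's advantage is that it generalizes to prompts it has never encountered.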

But LLMs can do much more than fill in the blanks. By ingesting such vast quantities of text during training, they build a deep understanding of language that allows them to engage in complex linguistic tasks. For instance, an LLM can be prompted to write a news article, answer questions, translate between languages, summarize long documents, and even generate computer code.
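In practice, prompting a model for a task such as translation often means placing an instruction and a few worked examples ahead of the new input. Here is a small sketch of how such a prompt might be assembled as plain text. The function name and the “Input:” and “Output:” labels are conventions chosen for this example, not any particular product's required format.

```python
def build_few_shot_prompt(instruction, examples, new_input):
    """Assemble an instruction, worked examples, and a new input into one prompt."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")  # blank line between examples
    # Leave the final output empty so the model fills it in.
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("good morning", "bonjour")],
    "thank you",
)
print(prompt)
```

The resulting text would be sent to the model, which continues it from the final “Output:” line. Swapping in a different instruction and examples turns the same model toward a different task.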

Importantly, LLMs do more than memorize and regurgitate text from their training data. They learn to recognize abstract concepts and relationships, enabling them to generate original content that is coherent and contextually relevant. It’s this ability to process and produce language at a human-like level that makes LLMs so powerful and versatile.

Major Players in the Market

The development of large language models is largely driven by tech giants and well-funded AI research labs. Some of the key players include:

- OpenAI: Co-founded in 2015 by a group that included Elon Musk and Sam Altman, OpenAI is a pioneer in LLM development. Their GPT series has set the standard for language AI.

- Google: Through Google DeepMind, formed in 2023 by merging the Google Brain and DeepMind teams, Google is heavily invested in advancing LLMs and integrating them into its products and services.

- Microsoft: A major force in the LLM space, Microsoft is collaborating with companies like OpenAI, NVIDIA, and Mistral AI to develop and deploy cutting-edge language models. Microsoft has integrated GPT models into offerings like Azure cloud services, Office suite, and Bing search.

- NVIDIA: As a leading provider of AI hardware and software, NVIDIA plays a crucial role in enabling the training and deployment of large language models.

- Anthropic: This AI safety startup, founded by former OpenAI researchers, is developing “constitutional AI” models that aim to be more controllable and aligned with human values.

Other notable companies working on LLMs include Meta (formerly Facebook), Huawei, Baidu, and AI21 Labs.

Products Using LLMs Available Online

While much of the development around large language models happens behind the scenes, several consumer-facing products are already harnessing their power:

- ChatGPT by OpenAI: This conversational AI chatbot, powered by GPT models, has taken the world by storm with its ability to engage in human-like dialogue and assist with a wide range of tasks.

- GitHub Copilot: Developed in collaboration with OpenAI, this AI pair programmer helps developers write code faster by suggesting completions based on context.

- Microsoft Copilot: Built on OpenAI’s GPT models, Copilot is available online and across Microsoft’s ecosystem, offering AI-powered writing assistance, data analysis, and more with the aim of boosting productivity.

- Gemini: Google’s chatbot, previously known as Bard, now runs on the company’s Gemini family of LLMs. Gemini models are multimodal, handling text, images, audio, and video.

- Claude by Anthropic: Anthropic’s AI assistant, Claude, is a powerful LLM known for its strong reasoning capabilities, safety features, and ability to handle complex tasks across various domains.

- Mistral AI: Mistral AI offers open-source and commercial LLMs, including Mistral 7B and Mistral Large, which are designed for efficient real-time applications and excel in areas like mathematics, code generation, and reasoning.

Other notable LLMs include Jurassic-1 by AI21 Labs, Chinchilla by DeepMind, and BLOOM, a collaborative open-source effort. As LLMs continue to advance, we can expect to see even more innovative applications that bring the power of language AI to the masses.

Applications and Impact

The potential applications of large language models are vast and far-reaching. In the realm of customer service, LLMs are being used to create highly sophisticated chatbots that can engage in natural conversations and provide helpful information to users. This could greatly improve the efficiency and quality of customer support while reducing costs.

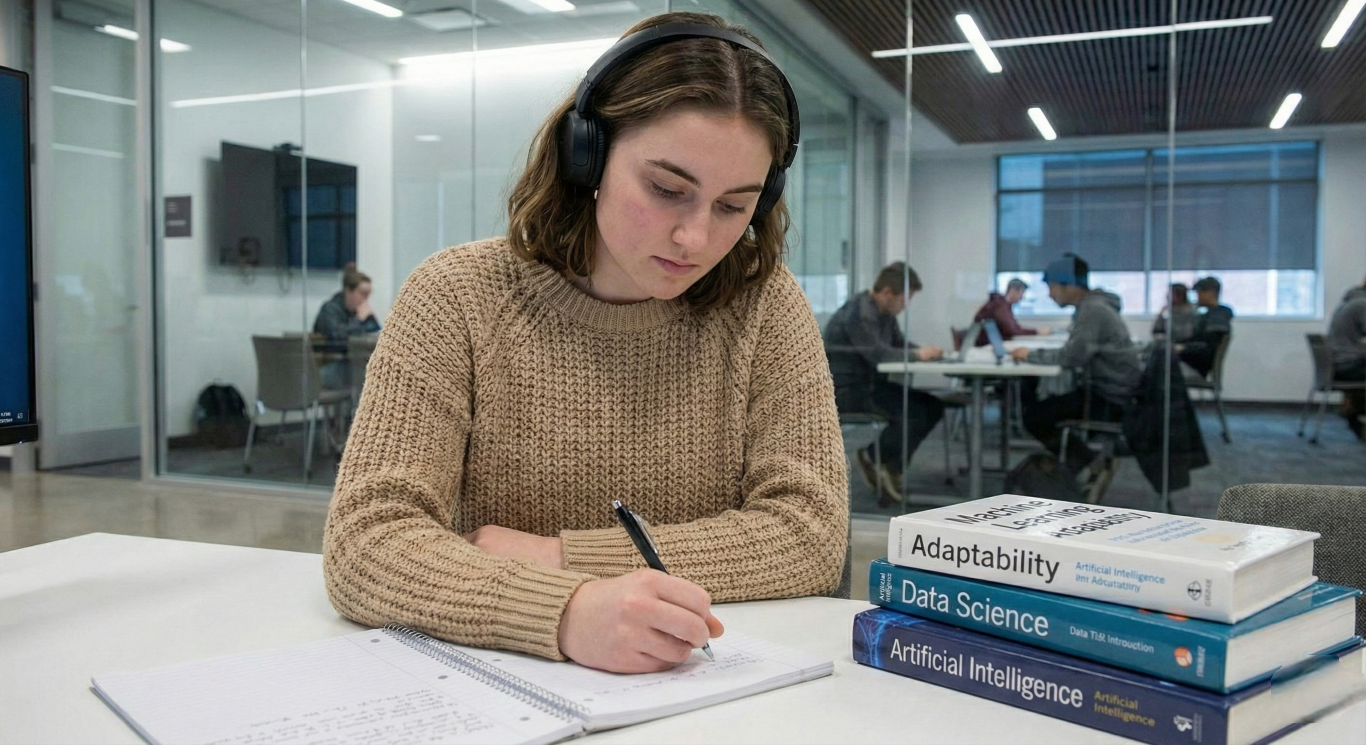

In education, LLMs have the potential to revolutionize personalized learning. Imagine an AI tutor that can adapt its teaching style and content to each student’s needs, providing targeted explanations, answering questions, and even generating customized study materials. This could help bridge educational gaps and make high-quality instruction more accessible.

LLMs are also poised to transform the way we interact with information. Search engines powered by LLMs could provide more direct and conversational answers to queries, making it easier for people to find what they’re looking for. Similarly, LLMs could be used to automatically generate summaries of long articles or reports, saving time and helping people stay informed.

In the creative industries, LLMs are already being used to assist with writing tasks, from generating ideas and outlines to drafting entire articles and stories. While human oversight and editing are still crucial, LLMs could significantly speed up the content creation process and open up new avenues for creative expression.

The Future of Large Language Models

The world of large language models is changing fast. Not only are LLMs getting bigger, with some reported to have over a trillion parameters (the adjustable values a model learns during training), but they’re also becoming more capable in other ways.

One of the most notable developments is that LLMs are becoming multimodal. This means they can understand and work with not just text, but also images, audio, and video. Imagine asking a question by showing the model a picture and getting an answer right away!

Researchers are making LLMs more powerful and efficient thanks to better hardware and improved training methods. And they’re not keeping these models to themselves: open-source projects like GPT-Neo and BLOOM release their models publicly so that anyone who’s curious can use and improve them.

LLMs are also being tailored to specialized fields such as healthcare, finance, and education, where they could help doctors, bankers, and teachers do their jobs better.

With LLMs, we’re moving closer to AI that can converse, and appear to reason, much as we do. They could change the way we live by making everyday tasks, like talking to customer service or learning a new subject in school, much easier. But as we get excited about all this, we also have to make sure we use these powerful tools wisely and safely.

Challenges and Concerns

Despite their immense potential, large language models also raise important challenges and concerns that need to be addressed as the technology develops.

One major issue is bias. Because LLMs learn from human-generated text data, they can inadvertently pick up and amplify societal biases related to race, gender, and other sensitive attributes. Researchers and developers must work to identify and mitigate these biases to ensure that LLMs are fair and inclusive.

Another challenge is the potential for misuse. In the wrong hands, LLMs could be used to generate fake news, impersonate real people, or even create malicious software code. As LLMs become more powerful and accessible, it will be crucial to develop safeguards and regulations to prevent harmful applications.

There are also concerns about the environmental impact of training large language models, which requires enormous amounts of computational power and energy. Finding ways to make LLMs more efficient and sustainable will be an important area of research going forward.

Finally, as LLMs continue to advance, they raise profound questions about the nature of intelligence and creativity. Some worry that LLMs could eventually replace human writers and knowledge workers, leading to job losses and economic disruption. Others argue that LLMs will augment rather than replace human capabilities, leading to new forms of collaboration between people and machines.

Final Thoughts

Large language models represent a major breakthrough in artificial intelligence, with the potential to revolutionize the way we interact with computers and information. By leveraging deep learning techniques and massive datasets, LLMs can understand, interpret, and generate human-like text on a wide range of topics, opening up new possibilities for applications in customer service, education, content creation, and beyond.

However, the development of LLMs also raises important challenges and concerns, from mitigating bias and preventing misuse to ensuring efficiency and sustainability. As the technology continues to advance, it will be crucial for researchers, developers, and policymakers to work together to address these issues and ensure that LLMs are developed and deployed in a responsible and beneficial manner.

Despite these challenges, the potential benefits of large language models are immense. As the technology continues to evolve and mature, it has the power to transform the way we interact with computers, unlock new realms of creativity and knowledge, and help solve some of the world’s most pressing problems. The age of conversational AI has arrived, and large language models are leading the way.