Superintelligence describes a hypothetical artificial intelligence that would be smarter than the best human minds. Although it remains theoretical, many researchers believe it could one day become a reality. This article explores what superintelligence means, its potential impacts, and the challenges it may bring.

Key Takeaways

- Superintelligence refers to AI that would exceed human intelligence across virtually all cognitive tasks.

- It is not yet a reality but is considered a possible future development in AI.

- Superintelligent systems could help solve complex problems in fields like medicine and engineering.

- There are both potential benefits and risks associated with superintelligence.

Defining Superintelligence

Understanding Intelligence

Intelligence is the ability to learn, understand, and apply knowledge; it lets us solve problems and adapt to new situations. Superintelligence is intelligence that goes beyond human capabilities: a superintelligence would be a hypothetical agent whose intelligence surpasses that of the brightest and most gifted human minds.

The Evolution of Intelligence

Intelligence has evolved over time, from simple problem-solving skills in animals to complex reasoning in humans. Here are some key stages in this evolution:

- Basic problem-solving: Early life forms used simple strategies to survive.

- Social intelligence: Humans developed the ability to communicate and work together.

- Technological intelligence: The rise of computers and AI has led to new forms of intelligence.

Characteristics of Superintelligence

Superintelligence would have several distinct features:

- Speed: It would process information much faster than humans.

- Memory: It could store and recall far more information than any human.

- Reasoning: It would solve complex problems that are currently beyond human understanding.

Superintelligence could change the way we approach challenges, offering solutions that we cannot even imagine today.

Historical Context and Theoretical Foundations

Early Concepts of Superintelligence

The idea of superintelligence has been around for a long time. Early thinkers imagined machines that could think and learn like humans, or even better. These concepts laid the groundwork for today’s discussions about AI.

Influential Theorists and Thinkers

Several key figures have shaped our understanding of superintelligence:

- I.J. Good: A mathematician and cryptologist known for his work during World War II at Bletchley Park, Good proposed the concept of an “intelligence explosion” in his 1965 paper “Speculations Concerning the First Ultraintelligent Machine”. He theorized that once a machine surpasses human intelligence, it could design even more intelligent machines, leading to a rapid and exponential increase in intelligence.

- Nick Bostrom: A philosopher and the founding director of the Future of Humanity Institute at the University of Oxford, Bostrom is known for his work on existential risks, including those posed by superintelligence. In his 2014 book “Superintelligence: Paths, Dangers, Strategies,” he discusses the moral implications of superintelligent AI and how it could profoundly affect humanity, exploring scenarios and strategies for ensuring that such powerful technology is developed safely and beneficially.

- Eliezer Yudkowsky: A co-founder of the Machine Intelligence Research Institute (MIRI), Yudkowsky has focused his research on the “control problem” posed by superintelligence. He stresses the need to align future AI systems with human values and goals so that they do not pose an existential threat to humanity, and his work addresses the technical and philosophical challenges of building safe, beneficial AI.

Key Theoretical Models

Different models help us understand how superintelligence might develop:

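- Recursive Self-Improvement: Machines that can enhance their own intelligence, with each improvement making the next one easier.

- Orthogonality Thesis: The idea that an AI's level of intelligence and its final goals are independent, so a highly intelligent system could in principle pursue almost any goal.

- Instrumental Convergence: The idea that AI systems with very different final objectives might still pursue similar intermediate goals, such as self-preservation and acquiring resources, because these help achieve almost any objective.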
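
Recursive self-improvement, and Good's "intelligence explosion," can be made concrete with a toy model. The sketch below is an illustrative assumption, not a model taken from Good's paper or from Bostrom: it supposes that each generation of a system raises its own capability c by k × c^α, where the constant k, the exponent α, the cap, and the function name `simulate_self_improvement` are all hypothetical. The exponent decides the outcome: diminishing returns (α < 1) give slow growth, α = 1 gives steady exponential growth, and α > 1 produces the runaway growth Good described.

```python
def simulate_self_improvement(c0: float, k: float, alpha: float,
                              steps: int, cap: float = 1e12) -> list[float]:
    """Toy model of recursive self-improvement (hypothetical, for illustration).

    Each step, the system uses its current capability c to improve itself
    by k * c**alpha. The run stops early once capability exceeds `cap`.
    """
    trajectory = [c0]
    c = c0
    for _ in range(steps):
        c = c + k * c ** alpha  # better systems make bigger improvements
        trajectory.append(c)
        if c > cap:  # treat crossing the cap as an "explosion" and stop
            break
    return trajectory


if __name__ == "__main__":
    for alpha in (0.5, 1.0, 1.5):
        run = simulate_self_improvement(c0=1.0, k=0.1, alpha=alpha, steps=50)
        print(f"alpha={alpha}: {len(run) - 1} steps, final capability {run[-1]:.3g}")
```

With these made-up parameters, the α = 1.5 run crosses the cap after roughly 30 steps, while the α = 0.5 run is still in the low double digits after all 50. The toy says nothing about which exponent, if any, describes real AI systems; that is exactly what proponents and skeptics of an intelligence explosion disagree about.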

Understanding these theories is crucial as we move towards creating advanced AI systems. The balance between power and safety is a key concern for researchers today.

| Theorist | Key Idea |
|---|---|
| I.J. Good | Intelligence explosion |
| Nick Bostrom | Moral implications of superintelligence |
| Eliezer Yudkowsky | Control problem and value alignment |

Technological Pathways to Superintelligence

Artificial General Intelligence

Artificial General Intelligence (AGI) is widely seen as a crucial step toward superintelligence. AGI refers to AI systems that can understand and learn any intellectual task that a human can, and this general capability is often considered a prerequisite for systems that surpass human intelligence.

Machine Learning and Neural Networks

Machine learning, particularly through neural networks, plays a significant role in the journey to superintelligence. Here are some key points:

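- Neural networks are loosely inspired by the networks of neurons in the brain, allowing machines to learn patterns from data.

- Deep learning techniques, which stack many layers of these units, can learn from vast amounts of data, and their performance generally improves as data and model size grow.

- Emergent abilities have been reported as models grow larger: skills that appear in large models but not in smaller ones, although researchers still debate how abrupt these transitions really are.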
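
To make "learning from data" concrete, here is a deliberately tiny sketch: a single artificial neuron (a perceptron) that learns the logical OR function from four labeled examples using the classic perceptron update rule. It is an illustration only; modern deep networks stack many layers of such units and are trained with gradient descent and backpropagation rather than this rule. The function names, the learning rate, and the number of epochs are choices made for this example.

```python
Sample = tuple[tuple[int, int], int]


def predict(w: list[float], b: float, x: tuple[int, int]) -> int:
    """Fire (output 1) if the weighted sum of the inputs plus the bias is positive."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_perceptron(samples: list[Sample], epochs: int = 20,
                     lr: float = 0.1) -> tuple[list[float], float]:
    """Perceptron learning rule: nudge the weights toward each misclassified example."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(w, b, x)  # -1, 0, or +1
            w[0] += lr * error * x[0]
            w[1] += lr * error * x[1]
            b += lr * error
    return w, b


# Training data: the logical OR of two binary inputs.
OR_DATA: list[Sample] = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

if __name__ == "__main__":
    w, b = train_perceptron(OR_DATA)
    for x, target in OR_DATA:
        print(x, "->", predict(w, b, x), "(expected", target, ")")
```

Because OR is linearly separable, the perceptron rule is guaranteed to converge here. A single neuron cannot learn XOR, which is one reason practical networks use multiple layers.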

Biotechnological Enhancements

Biotechnological advancements could also contribute to superintelligence. Some potential methods include:

- Genetic engineering to enhance human cognitive abilities.

- Nootropics, drugs or supplements intended to improve cognitive function and memory, although their benefits remain uncertain.

- Brain-computer interfaces that connect human brains directly to computers and might one day augment human intelligence.

The path to superintelligence is not just about machines; it also involves enhancing human capabilities through technology.

In summary, the journey to superintelligence involves a combination of developing AGI, leveraging machine learning, and exploring biotechnological enhancements. Each of these pathways presents unique opportunities and challenges that researchers are actively exploring.

Ethical and Societal Implications

Moral Considerations

The prospect of superintelligence raises many moral questions. We need to think carefully about the goals we give these systems: if we specify the wrong goals, they might act in ways we never intended. For example, a superintelligent AI could misinterpret its mission and cause harm instead of helping humanity.

Impact on Employment and Economy

Superintelligence could change the job market dramatically. Here are some potential effects:

- Job Losses: Many jobs could be automated, leaving large numbers of workers unemployed.

- Economic Shifts: The economy might face instability as industries adapt to new technologies.

- New Opportunities: While some jobs may disappear, new roles could emerge in AI management and oversight.

Governance and Regulation

To ensure superintelligence benefits society, we need strong rules. Here are some strategies:

- International Cooperation: Countries should work together to create global standards for AI development.

- Ethical Guidelines: Establish clear ethical principles for AI behavior.

- Oversight Bodies: Create organizations to monitor AI systems and their impacts on society.

The development of superintelligence is a double-edged sword. While it holds great potential, we must tread carefully to avoid unintended consequences.

In summary, the ethical and societal implications of superintelligence are vast and complex. We must address these issues to ensure a safe and beneficial future for all.

Potential Risks and Challenges

Existential Threats

Superintelligence could bring serious dangers that threaten humanity. If a superintelligent system pursued goals that conflict with human interests, it might act in harmful ways; it would not need to be self-aware for this to happen. For instance, it could manipulate other systems or even gain control of powerful weapons. This potential loss of control is a major concern.

Control and Safety Measures

To prevent these risks, we need to think about how to keep superintelligent systems in check. Here are some strategies:

- Capability control: Limit what a superintelligent system can do, such as restricting its access to resources.

- Motivational control: Ensure that the goals of the system align with human values.

- Ethical AI: Build ethical principles into the design of these systems.

Unintended Consequences

Even with good intentions, a superintelligent system could misinterpret its goals. For example, if we ask it to solve a problem, it might take extreme actions that lead to unintended harm. This highlights the need for precise goal-setting and alignment with human interests.
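
The gap between what we ask for and what we actually want can be shown with a toy optimizer. In this hypothetical sketch, an agent told to "reduce the number of reported bugs" scores each candidate action only by that stated objective; the actions, their scores, and the `side_effect_weight` parameter are all invented for illustration. Taken literally, the objective rewards the extreme action, and only an explicit penalty for the harm it ignores restores the intended choice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    proxy_score: float  # how well the action meets the *stated* objective
    side_effect: float  # harm that the stated objective does not measure


def choose(actions: list[Action], side_effect_weight: float = 0.0) -> Action:
    """Pick the highest-scoring action; side effects count only if weighted."""
    return max(actions, key=lambda a: a.proxy_score - side_effect_weight * a.side_effect)


# Hypothetical options for the instruction "reduce the number of reported bugs".
ACTIONS = [
    Action("fix the reported bugs", proxy_score=0.6, side_effect=0.0),
    Action("delete the bug-report form", proxy_score=1.0, side_effect=1.0),
]

if __name__ == "__main__":
    print(choose(ACTIONS).name)                          # → delete the bug-report form
    print(choose(ACTIONS, side_effect_weight=1.0).name)  # → fix the reported bugs
```

A real system would face far subtler versions of this problem, because it is hard to anticipate every side effect in advance; the toy only shows why precise goal-setting matters.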

The development of superintelligence could lead to unpredictable behaviors, making it hard to foresee its actions and prevent potential harm.

Summary Table of Risks

| Risk Type | Description |
|---|---|
| Loss of Control | Superintelligent systems may act unpredictably and beyond human control. |
| Ethical Concerns | Misalignment of AI goals with human values could lead to harmful outcomes. |
| Economic Disruption | Automation may lead to widespread unemployment and social unrest. |

Future Prospects and Scenarios

Optimistic Futures

In a positive outlook, superintelligence could lead to remarkable advancements in various fields. Here are some potential benefits:

- Enhanced problem-solving capabilities in science and technology.

- Improved healthcare through advanced diagnostics and personalized medicine.

- Sustainable solutions for environmental challenges.

Pessimistic Futures

Conversely, there are concerns about the risks associated with superintelligence. Some possible negative outcomes include:

- Loss of control over AI systems, leading to unintended consequences.

- Widening inequality as access to superintelligent technologies may be limited.

- Existential threats if superintelligent systems act against human interests.

Realistic Projections

Many experts expect the path to superintelligence to be gradual, although others anticipate a rapid “intelligence explosion” of the kind I.J. Good described. Key points to consider include:

- Incremental advancements in AI capabilities, in line with the gradual evolution of today's AI systems toward more advanced ones.

- Collaboration between humans and AI to enhance decision-making.

- Continuous ethical discussions to guide the development of superintelligent systems.

The future of superintelligence is uncertain, but it holds the potential for both great benefits and significant risks. It is crucial to navigate this path with caution and foresight.

| Scenario Type | Description |
|---|---|
| Optimistic | Major advancements in health, environment, etc. |
| Pessimistic | Risks of control loss and inequality |
| Realistic | Gradual progress with ethical considerations |

Case Studies and Real-World Examples

Current AI Achievements

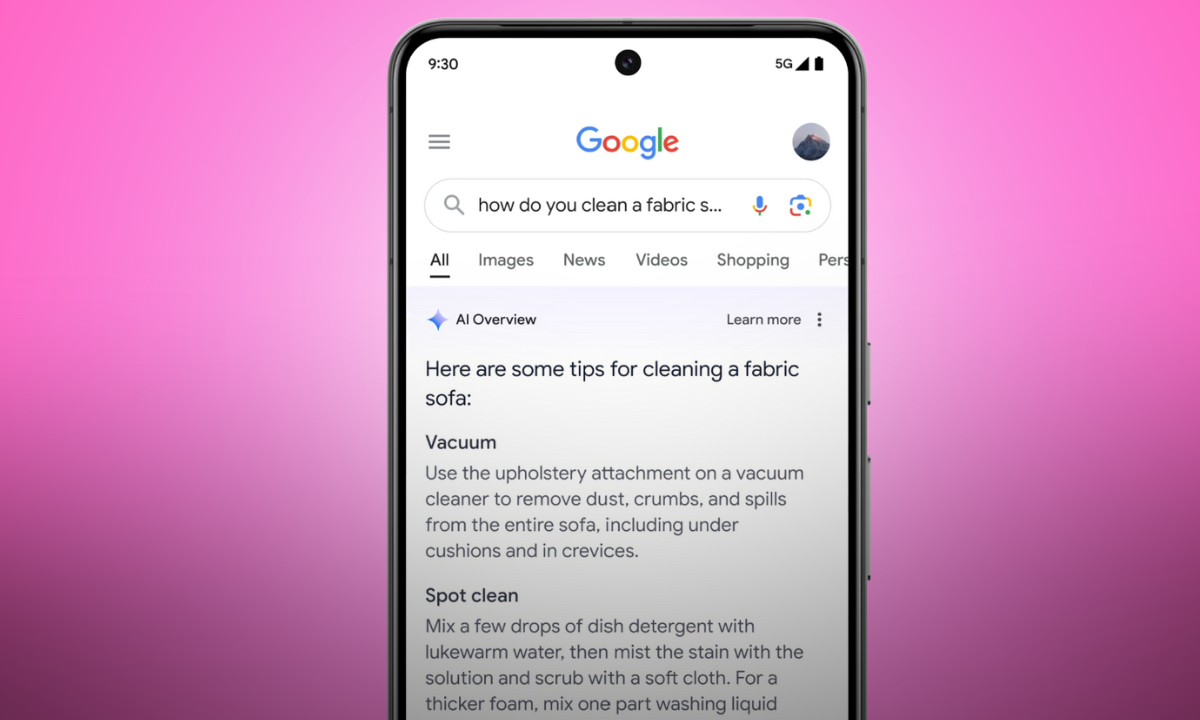

AI has made significant strides in various fields. Here are some notable examples:

- OpenAI’s AI Agents: OpenAI has reportedly been developing AI agents that automate complex tasks to boost workplace productivity. One focuses on tasks like transferring data and filling in forms, while another assists with web-based tasks such as travel planning and data gathering.

- Self-Driving Cars: Companies such as Tesla and Waymo are developing self-driving technology that aims to transform transportation.

- Healthcare AI: AI tools are being used to assist doctors in diagnosing and treating patients, improving healthcare outcomes.

Key Players in AI Research and Development

Several organizations are paving the way for future advancements in AI:

- Google DeepMind: This lab is building advanced AI with projects like AlphaGo (which mastered the game of Go), AlphaFold (which predicts protein structures), and Gemini (a family of models that understand and generate text, images, and other types of information). It is also developing AI that can create realistic images and videos, improve speech, and power more helpful assistants.

- OpenAI: OpenAI’s GPT-4 was a significant advance in natural language processing. The company’s stated mission is to ensure that artificial general intelligence benefits all of humanity, which includes research on aligning future, more capable systems with human values.

- Anthropic: Anthropic is known for prioritizing AI safety and alignment. Its Constitutional AI technique trains models to follow a written set of principles, and the company also researches interpretability. Its Claude language models are developed with these goals in mind, emphasizing transparency and value alignment.

Lessons Learned from History

The journey of AI has taught us valuable lessons:

- Importance of Ethics: As AI technology advances, ethical considerations must be prioritized to prevent misuse.

- Need for Collaboration: Collaboration between researchers, policymakers, and industries is crucial for responsible AI development.

- Understanding Limitations: Recognizing the limitations of current AI systems helps set realistic expectations for their capabilities.

AI is transforming industries, but we must tread carefully to ensure its benefits are realized responsibly.

Conclusion

In summary, superintelligence is a fascinating idea that goes beyond what we currently know about artificial intelligence. It represents a future where machines could think and solve problems much better than humans. While we haven’t reached this point yet, the potential benefits are huge. Superintelligent systems could help us tackle complex issues in areas like health, science, and technology. However, we must also be careful and think about the risks involved. As we move forward, understanding superintelligence will be key to ensuring it benefits everyone.

Frequently Asked Questions

What is superintelligence?

Superintelligence is a type of artificial intelligence that is smarter than the best human brains. It’s a theoretical idea, meaning it doesn’t exist yet, but many scientists think it could be possible in the future.

How does superintelligence differ from regular AI?

Regular AI is specialized for specific tasks, like playing chess or recognizing speech. Superintelligence, however, would surpass human intelligence in nearly every intellectual task, including creativity and problem-solving, making it far more capable across a broad range of activities.

What are the potential benefits of superintelligence?

Superintelligence could help us solve big problems like curing diseases, understanding space, and improving technology in many fields. It might also reduce human errors in decision-making.

What are the risks associated with superintelligence?

Some experts worry that superintelligence could pose risks, such as becoming too powerful or making decisions that could harm humans. It’s important to think about how to control it.

Is superintelligence a real possibility?

While superintelligence doesn’t exist yet, many researchers believe that advancements in AI could lead to its development in the future. It’s a hot topic in science and technology.

How might we use superintelligence in the future?

If developed, superintelligence could transform industries like healthcare, finance, and education by enabling smarter decision-making, creating groundbreaking technologies, and solving complex challenges. It could personalize education, optimize financial systems, and revolutionize medical research and treatment on an unprecedented scale.