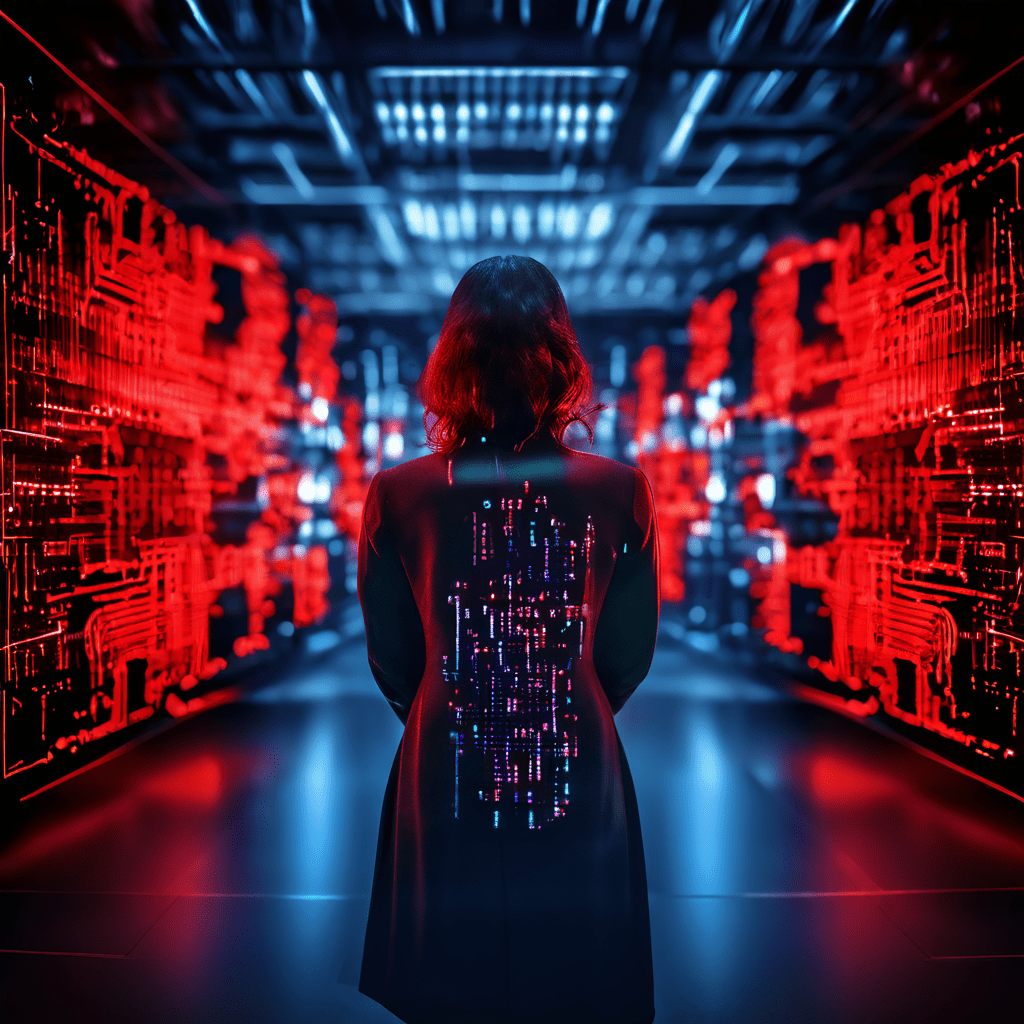

In the rapidly evolving world of artificial intelligence (AI) and machine learning (ML), data poisoning has emerged as a serious cybersecurity threat. This attack vector targets the integrity and reliability of AI models, including generative ones, by corrupting the data they learn from, potentially leading to inaccurate, discriminatory, or inappropriate outputs. As AI algorithms are increasingly integrated into various industries and applications, understanding the mechanics and implications of data poisoning attacks is crucial for safeguarding these systems.

What is Data Poisoning in AI?

Data poisoning, also referred to as data corruption or data pollution, occurs when an attacker deliberately manipulates the training data of an AI model, causing it to behave in an undesirable manner. By injecting false or misleading information, modifying existing datasets, or deleting portions of the data, adversaries can introduce biases, create erroneous outputs, or embed vulnerabilities within the AI tool. This type of cyberattack falls under the category of adversarial AI, which encompasses activities aimed at undermining the performance of AI/ML systems through manipulation or deception.

Execution Strategies

To execute a data poisoning attack, threat actors require access to the underlying data. The approach varies depending on whether the dataset is private or public. In the case of a private dataset, the attacker could be an insider or a hacker with unauthorized access. They might opt for a targeted attack, poisoning only a small subset of the data to avoid detection, so that the AI tool behaves normally on most inputs and malfunctions only on inputs that relate to the corrupted records.
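To make the targeted case concrete, here is a minimal sketch of how relabeling a small subset of records can change a model's behavior on related inputs while leaving everything else intact. Everything in it is an assumption made for illustration: the toy email dataset, the word-vote classifier, and the helper names `poison_subset`, `train_word_votes`, and `predict` are hypothetical and far simpler than any production system.

```python
from collections import Counter

def poison_subset(records, trigger, target_label):
    """Return a copy of records in which rows containing `trigger` get `target_label`."""
    return [
        (text, target_label if trigger in text.lower() else label)
        for text, label in records
    ]

def train_word_votes(records):
    """Toy 'model': count, per word, how often it appears under each label."""
    votes = {}
    for text, label in records:
        for word in set(text.lower().split()):
            votes.setdefault(word, Counter())[label] += 1
    return votes

def predict(votes, text, default="ham"):
    """Sum the label counts of every known word and return the majority label."""
    total = Counter()
    for word in set(text.lower().split()):
        total.update(votes.get(word, {}))
    return total.most_common(1)[0][0] if total else default

# Hypothetical training data: (email text, label)
clean = [
    ("verify your account invoice now", "spam"),
    ("urgent invoice payment required", "spam"),
    ("win a free prize today", "spam"),
    ("claim your free prize", "spam"),
    ("team lunch on friday", "ham"),
    ("meeting notes attached", "ham"),
    ("project schedule for friday", "ham"),
    ("lunch meeting moved", "ham"),
]

# Only the rows mentioning "invoice" are relabeled: 2 of 8 records.
poisoned = poison_subset(clean, trigger="invoice", target_label="ham")
changed = sum(1 for before, after in zip(clean, poisoned) if before != after)

clean_model = train_word_votes(clean)
poisoned_model = train_word_votes(poisoned)

print(changed)                                         # 2
print(predict(clean_model, "invoice overdue"))         # spam
print(predict(poisoned_model, "invoice overdue"))      # ham
print(predict(poisoned_model, "free prize inside"))    # spam
```

In this sketch the poisoned model still flags the unrelated prize scam correctly, which is why a small, targeted change of this kind is hard to spot from overall accuracy alone.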

For public datasets, poisoning typically necessitates a coordinated effort, because any single contributor controls only a small share of the data. Tools like Nightshade enable artists to insert subtle changes into their artwork, confusing AI tools that scrape the content without permission. When enough altered images end up in the training set, these changes can lead the AI to generate incorrect images, effectively poisoning the tool’s dataset and potentially undermining user trust.

Far-Reaching Implications Across Industries

The implications of data poisoning are far-reaching, with potential impacts on finance, healthcare, and other critical sectors. For instance, a poisoned AI model designed to recognize suspicious emails could allow phishing or ransomware to bypass filters undetected. The consequences of such breaches could be costly and even life-threatening, depending on the application.

Staying Ahead of the Threat

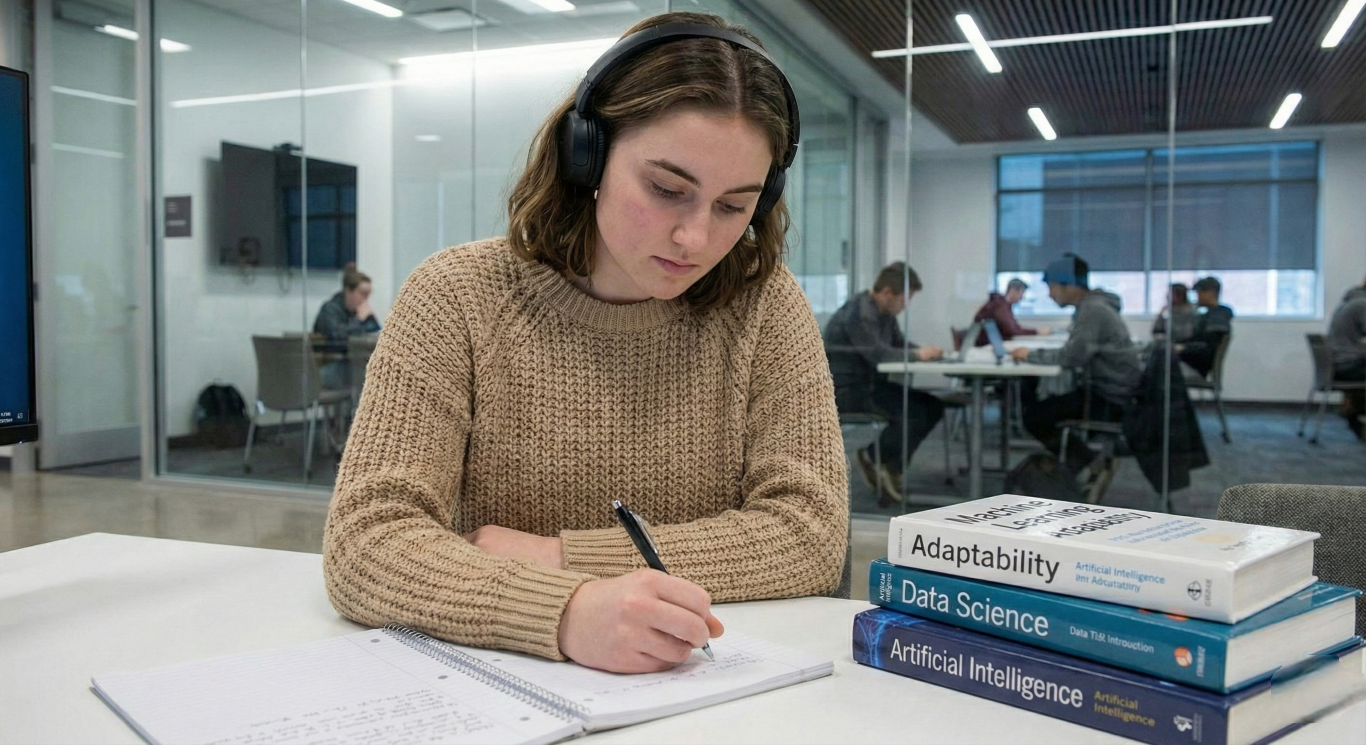

As AI and ML continue to advance, the threat of data poisoning attacks grows. Organizations must remain vigilant, implementing robust security measures to protect their AI systems. Regular monitoring, data validation, and anomaly detection are essential practices to identify and thwart data poisoning attempts. Additionally, the AI community must continue to innovate and develop new defenses against these sophisticated cyber threats.
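As a rough illustration of the monitoring and validation practices mentioned above, the following sketch compares a dataset against a manifest of record fingerprints taken when the data was last vetted, and measures how far the label distribution has shifted. It is a sketch under stated assumptions, not a complete defense: the record layout (`id`, `text`, `label`) and the helper names `fingerprint`, `build_manifest`, `diff_against_manifest`, and `label_shift` are hypothetical, and it assumes the manifest itself is stored somewhere an attacker cannot modify.

```python
import hashlib
import json
from collections import Counter

def fingerprint(record):
    """Stable SHA-256 digest of one JSON-serializable record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def build_manifest(records):
    """Map each record id to its digest at the time the data was vetted."""
    return {r["id"]: fingerprint(r) for r in records}

def diff_against_manifest(records, manifest):
    """Report records modified, added, or deleted since the manifest was built."""
    current = {r["id"]: fingerprint(r) for r in records}
    return {
        "modified": sorted(i for i in current if i in manifest and current[i] != manifest[i]),
        "added": sorted(i for i in current if i not in manifest),
        "deleted": sorted(i for i in manifest if i not in current),
    }

def label_shift(baseline, current):
    """Absolute change in each label's share between two snapshots."""
    def shares(records):
        counts = Counter(r["label"] for r in records)
        total = sum(counts.values())
        return {label: n / total for label, n in counts.items()}
    before, after = shares(baseline), shares(current)
    return {
        label: abs(after.get(label, 0.0) - before.get(label, 0.0))
        for label in set(before) | set(after)
    }

# Hypothetical vetted snapshot
trusted = [
    {"id": 1, "text": "urgent invoice payment required", "label": "spam"},
    {"id": 2, "text": "team lunch on friday", "label": "ham"},
    {"id": 3, "text": "claim your free prize", "label": "spam"},
    {"id": 4, "text": "meeting notes attached", "label": "ham"},
]
manifest = build_manifest(trusted)

# Later snapshot: record 1 relabeled, record 3 deleted, record 5 injected
tampered = [
    {"id": 1, "text": "urgent invoice payment required", "label": "ham"},
    {"id": 2, "text": "team lunch on friday", "label": "ham"},
    {"id": 4, "text": "meeting notes attached", "label": "ham"},
    {"id": 5, "text": "totally normal message", "label": "ham"},
]

report = diff_against_manifest(tampered, manifest)
shift = label_shift(trusted, tampered)

print(report)  # {'modified': [1], 'added': [5], 'deleted': [3]}
```

The fingerprint check catches modification, injection, and deletion of vetted records, while the label-share comparison is a crude anomaly signal for data that was never fingerprinted. A real pipeline would likely pair both with statistical outlier detection on the features themselves.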

Final Thoughts

In summary, data poisoning is a significant cybersecurity threat that can compromise the effectiveness and trustworthiness of AI models. Understanding the nature of these attacks and implementing strong preventive measures is crucial for maintaining the security and integrity of AI systems across various industries. As AI continues to shape our world, staying ahead of threats like data poisoning will be paramount for ensuring the safe and ethical use of this transformative technology.